Understanding the Risks (and Benefits) of AI in Operations

Open up any news tab on your browser today and you’ll probably see a story about how a company or industry is building AI models and applications to automate repetitive tasks, get better predictive insights, or improve their customer experience.

It’s a legitimate claim that many repetitive tasks bore human workers, and bored workers are more likely to make mistakes. Automating some of these tasks with AI can free people from drudgery, make them more efficient, and help them accomplish more with AI’s assistance than they could without it.

Yet while there are undoubtedly many benefits of AI in business, there are several potential dangers of AI as well. These include:

- An increase in inequality. People at the top of organizations will be freed up to do more by AI, but they may well crowd out the people on the next rung of the ladder, which will have a domino effect. The people who are behind may face even stiffer competition from those who already have a head start on them. You can imagine law firms or investment banks growing even larger thanks to AI, while the span of control of a few individuals expands with the technology and oversight they can wield.

- Lower absorptive capacity. Absorptive capacity is the ability of an organization to stay up to date with its industry, recognize the value of new information, remain conversant with trends, and stay up and running in a competitive context. If core functions are turned over to AI, humans might no longer be involved or actively innovating, and companies may not even be aware of what they’re missing out on.

For example, despite cultivating significant talent at its PARC facility (which refined the computer mouse and pioneered the desktop graphical user interface), Xerox management was often uninterested in advances in computing and information technology. A key misstep came in 1994, when Xerox outsourced 2,000 corporate IT jobs to Electronic Data Systems Corp. so it could focus more narrowly on sales. The short-run cost savings of eliminating its IT professionals left it blind to the profound long-run changes in the workplace that ultimately made its products obsolete in an increasingly paperless, digital world. Outsourcing core business functions to AI can carry similar risks of blinding a company to slow-moving but profound changes in the environment.

- Excessive technology dependence. As the CEO of a company, you may turn over so much of your operations to hardware and software that you become completely reliant on your suppliers to survive, living on as little more than a marketing arm or customer testimonial for a software company. That could be very bad for your business. A well-known example of the risk of this kind of dependence is the “children of the magenta” phenomenon, where airline pilots increasingly rely on automation, which worsens their performance, which drives more avionics automation, and so on. Taken to its extreme, no one would be able to fly a commercial airplane by hand.

Toward more intelligent adoption of AI

Given these potential risks, how do you get the most out of generative AI while it’s still so new? Here are a few strategies to consider to maximize AI’s impact on your business.

- Make haste slowly. Bringing in AI is a complicated choice, and ideally it would happen slowly and incrementally, use case by use case. To the extent that you can dip your toe into AI as a data leader and slowly figure out what’s going to fly with your workforce, all the better. Not surprisingly, that won’t work for some companies. They’re just going to have to go all in, and that’s where the biggest risks of AI probably lie.

- Keep your models fresh. Since many organizations will be adopting pre-trained large language models (LLMs) and then adjusting them for their purposes, they will of course be focused on getting the AI to work for their application. But as our culture becomes dominated by procedurally generated AI content, at what point can you be sure your AI is consistently picking out the right innovations in language and meaning and giving them the weight they deserve?

Language, after all, is constantly evolving, with novel words being introduced and old ones taking on new meaning. Because unfamiliar or antiquated language has to be parsed, reading Charles Dickens (let alone Shakespeare) almost certainly requires more effort for modern audiences than reading something published in 2023. The speed with which machine intelligence can assimilate and produce content will likely accelerate this cultural churn, as it draws on the work of writers, artists, and comedians whose job is to chase linguistic novelty. But the capacity for human innovation means machines will always be playing catch-up to unpredictable organic trends. Particular care will be required for political and social contexts where Overton windows and social norms can rapidly shift. Having a strategy to update and contemporize LLM-driven services will be necessary to deliver appropriate content.

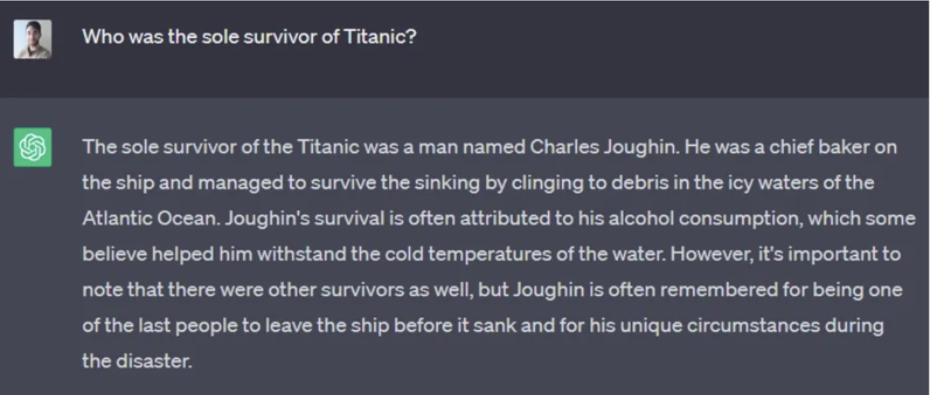

- Remember AI is a complement, not a replacement. When my students use AI to generate code, even the least practical (or outright incorrect) solutions can fire up their imaginations to build better things. Just as people who seek each other out for advice can spark creativity through back-and-forth, LLMs can be used in the same way. The caveat is that they’re trained to try to please you, drawing from an ocean of content scraped from the web and other sources to guess what you want. And if you’re a CEO or a data leader, you know that it’s dangerous to surround yourself with people who only tell you what you want to hear. Use AI you can trust and work to optimize your queries, but also rely on colleagues who will bring their own perspective and challenge conventional wisdom. That’s not what these models do.

LLM as yes-man. Most LLMs will return results that try to mirror the structure of your query. In this AI hallucination, there was only one (or one “other”) survivor of the Titanic, when in fact there were 706.

AI and NAFTA

I find it effective to think of AI a bit like a free trade agreement. Trade liberalization opens up benefits for the global workforce as a whole, but it has decidedly negative impacts on people whose jobs migrate out of their state or country. Not everyone can retool their job skills mid-career in a way they find financially sustainable or meaningful to their lives. In the case of AI, white-collar information workers like lawyers, artists, or even doctors may feel a free trade-like impact on their lives, which could spell social and political turbulence. For these reasons it’s incumbent on business leaders and data leaders to think about the consequences and propose solutions. These could include anything from Universal Basic Income to reshoring jobs for environmental, security, and supply chain reasons. The latter appears to be happening in the US, albeit slowly.

How will our jobs and lives be complicated by competing with AI? I believe we will need to recognize that many creative endeavors that we thought were the unique territory of human minds are actually a combination of experienced judgment and random experimentation that AI will increasingly be able to replicate. At the same time, we can’t rely on algorithms to determine fundamental human values like truth, meaning, and fairness. AI can only guess what you mean and then try to fill in the blanks for you. And that’s where opportunities may be lost if you stop investing in your human workers, stop advocating for AI to stay open, and let AI take control.

Remember that ideas are incredibly powerful. If you have an idea and you share it with me, we can both have the idea, and it didn’t cost anything. To the extent that we’re building a new frontier of productive and useful ideas that can power all kinds of new ventures, that could have great consequences for humanity. We just have to remember to include as many people as possible in the benefits, not just the very few at the top of the AI ladder.